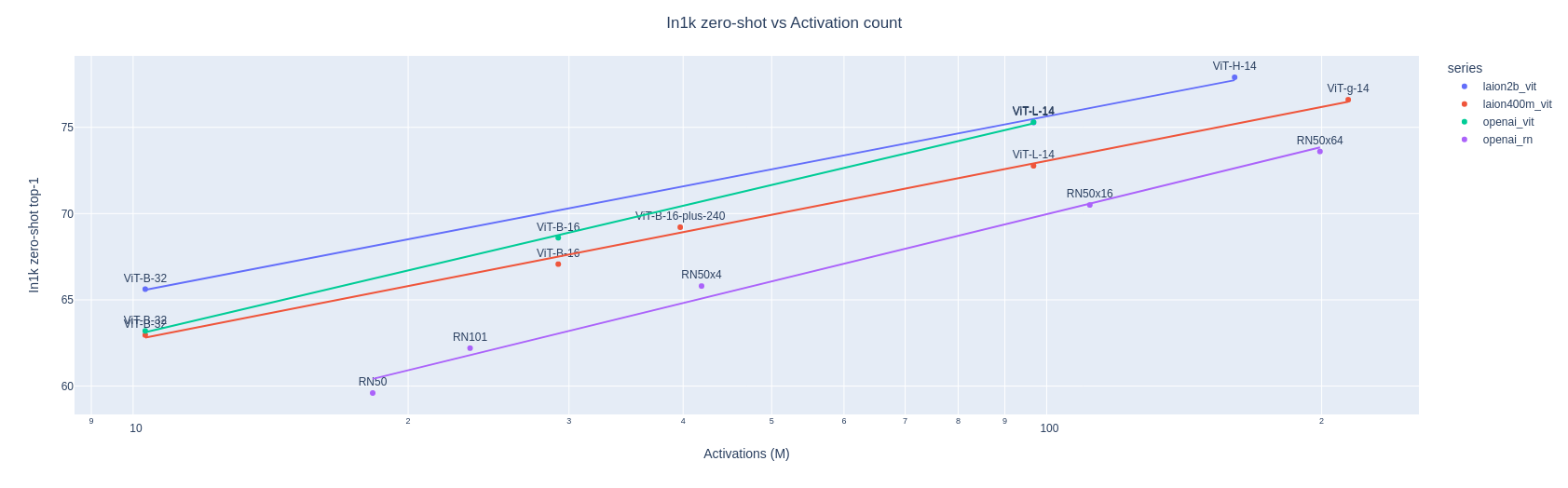

What's new in Finetuner 0.6? New CLIP models and ease of use make… | by Alex C-G | Jina AI | Medium

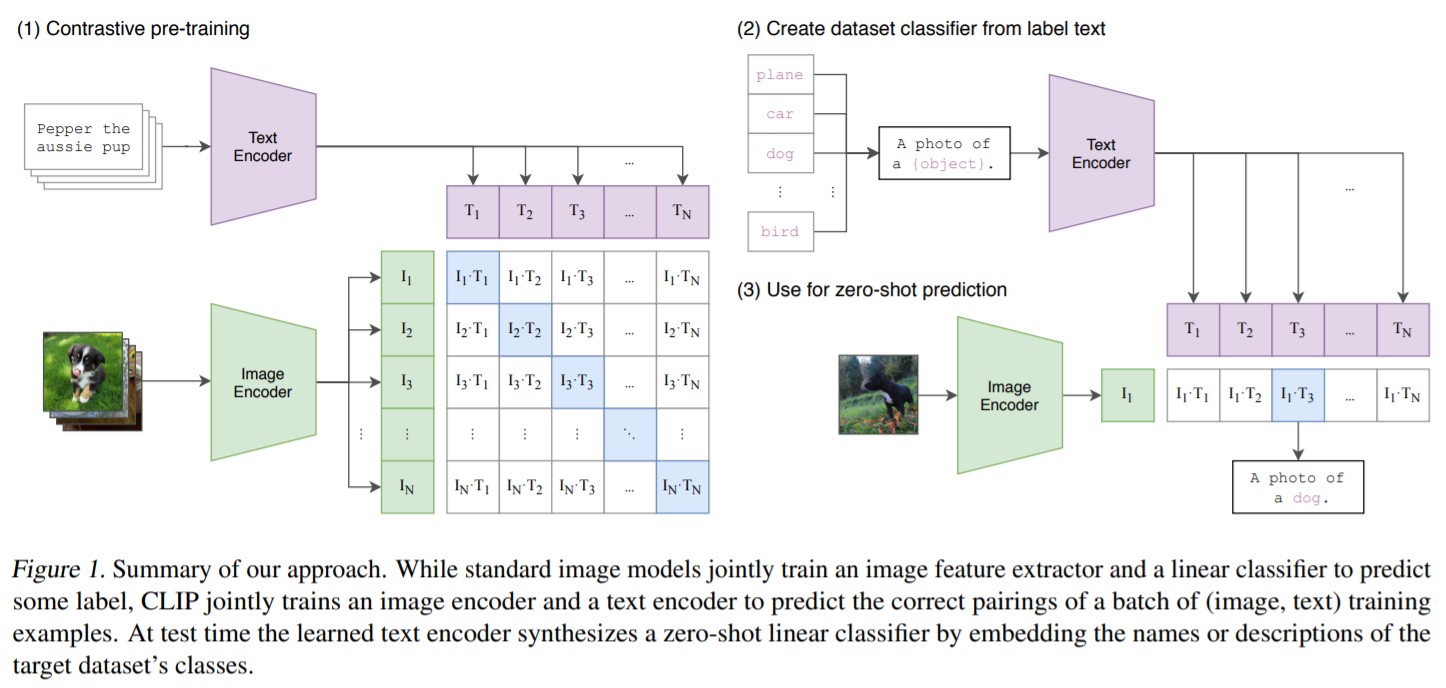

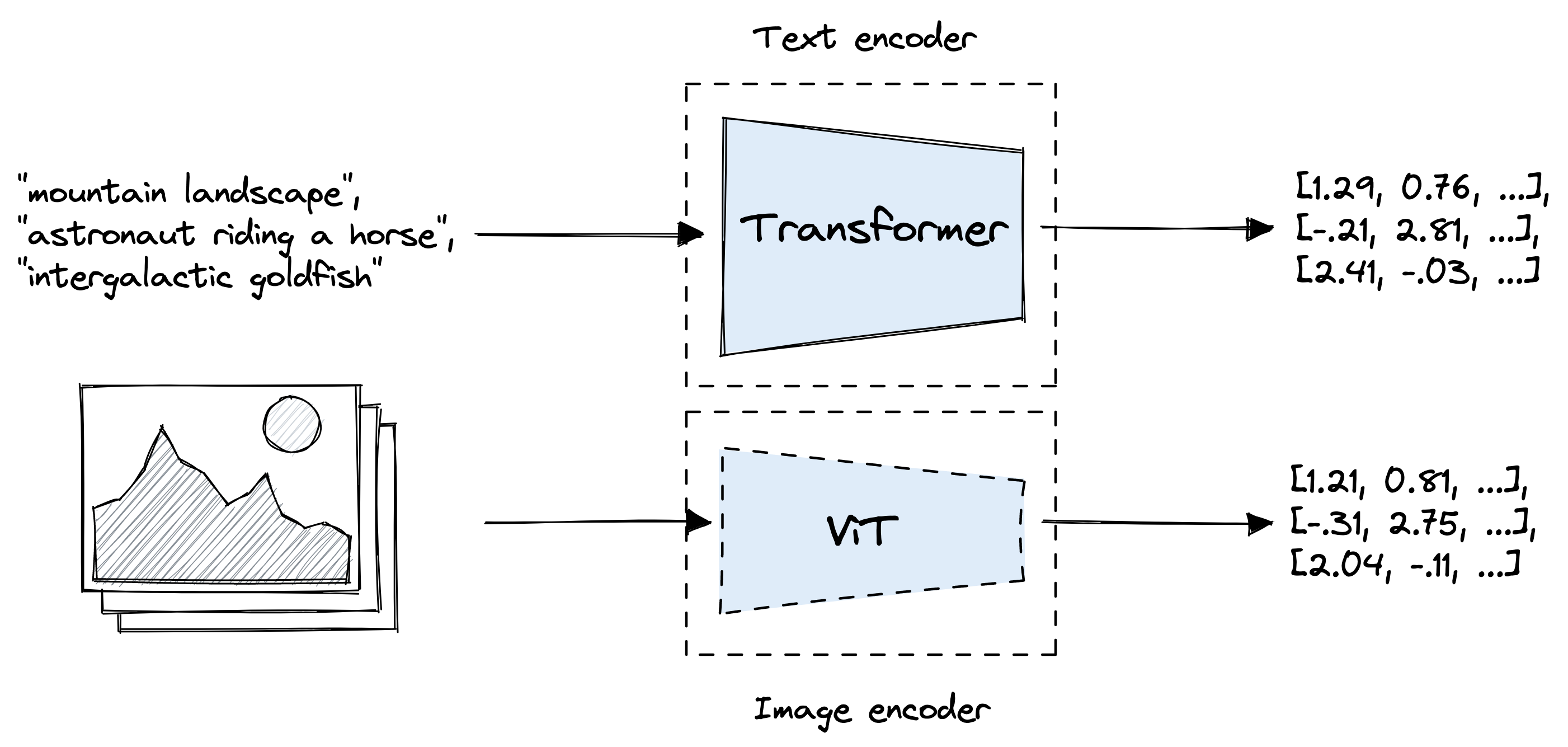

Computer vision transformer models (CLIP, ViT, DeiT) released by Hugging Face - AI News Clips by Morris Lee: News to help your R&D - Medium

Casual GAN Papers on Twitter: "OpenAI stealth released the model weights for the largest CLIP models: RN50x64 & ViT-L/14 Just change the model name from ViT-B/16 to ViT-L/14 when you load the